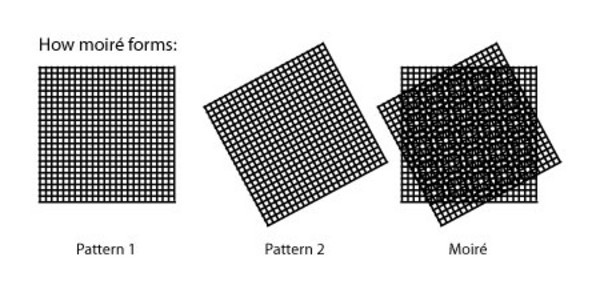

When we photograph subjects with dense, repeating detail, such as architectural decoration, textiles, or display screens, we sometimes see inexplicable colored stripes that seriously degrade the image. These stripes, which show up on shots full of fine, repeated detail, are known as moiré. How does moiré arise, and how can we avoid it in our shots? Let's look at the science.

The usual explanation of moiré is that in digital cameras, scanners, and similar devices, high-frequency interference occurs during shooting or scanning, and irregular colored stripes and shapes appear in the picture. The underlying principle is beating: when two sinusoids of equal amplitude and nearly equal frequency are superimposed, the amplitude of the combined signal varies at the difference between the two frequencies. If the spatial frequency of the pixels on the sensor is close to the spatial frequency of the stripes in the scene, moiré appears easily. To avoid moiré at the hardware level, the highest spatial frequency the lens can resolve should be well below the spatial sampling frequency of the sensor.
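The beating described above can be sketched numerically. This is a minimal one-dimensional illustration, not a model of any real camera: the stripe frequency of 95 cycles/mm and the sampling frequency of 100 pixels/mm are made-up values, and `alias_frequency` and `sample_cosine` are hypothetical helper names.

```python
import math

def alias_frequency(pattern_freq, sampling_freq):
    """Apparent frequency of a sinusoid after it is sampled at sampling_freq."""
    nearest_multiple = round(pattern_freq / sampling_freq) * sampling_freq
    return abs(pattern_freq - nearest_multiple)

def sample_cosine(freq, sampling_freq, count):
    """Sample cos(2*pi*freq*x) at the pixel positions x = n / sampling_freq."""
    return [math.cos(2 * math.pi * freq * n / sampling_freq) for n in range(count)]

# Assumed values for illustration only.
pattern_freq = 95.0    # stripes in the scene, cycles per mm
sampling_freq = 100.0  # sensor pixels per mm

beat = alias_frequency(pattern_freq, sampling_freq)

# The pixels cannot tell the fine pattern from a coarse one at the beat frequency.
fine = sample_cosine(pattern_freq, sampling_freq, 200)
coarse = sample_cosine(beat, sampling_freq, 200)
max_difference = max(abs(a - b) for a, b in zip(fine, coarse))

print(beat, max_difference)
```

In this sketch the sampled values of the 95 cycles/mm pattern match those of a 5 cycles/mm pattern, which is why the sensor records broad, slow stripes that are not in the scene.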

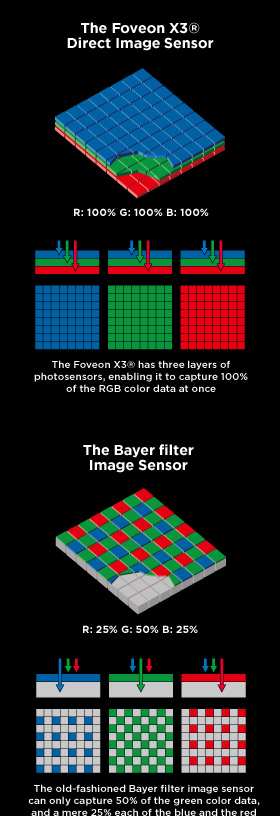

However, not every camera on the market has such a large pixel area, so some lower-end cameras with Bayer-array sensors add a low-pass filter in front of the sensor to reduce moiré. There is a trade-off, though: a low-pass filter also softens image detail. The effect on an SLR is not very serious, but pixel-peepers and sharpness enthusiasts are deeply unhappy about it.
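The trade-off can be illustrated with a simplified model. The sketch below assumes the low-pass filter splits each ray into two equal spots one pixel pitch apart, which gives a contrast response of |cos(π·f·d)|. Real filters, lenses, and pixel apertures are more complicated, and the 0.01 mm pixel pitch and the name `two_spot_mtf` are assumptions for illustration.

```python
import math

def two_spot_mtf(freq, separation):
    """Contrast passed by an idealized filter that splits light into two equal spots."""
    return abs(math.cos(math.pi * freq * separation))

pixel_pitch = 0.01               # mm, hypothetical sensor
nyquist = 1 / (2 * pixel_pitch)  # highest frequency the pixel grid can represent

# Contrast remaining at several fractions of the Nyquist frequency.
for fraction in (0.25, 0.5, 0.75, 1.0):
    freq = fraction * nyquist
    print(f"{freq:5.1f} cycles/mm -> contrast {two_spot_mtf(freq, pixel_pitch):.3f}")
```

Under these assumptions the filter removes detail at the Nyquist frequency, where aliasing begins, but it also lowers the contrast of coarser detail that the sensor could have recorded correctly. That loss is the softening that sharpness enthusiasts complain about.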

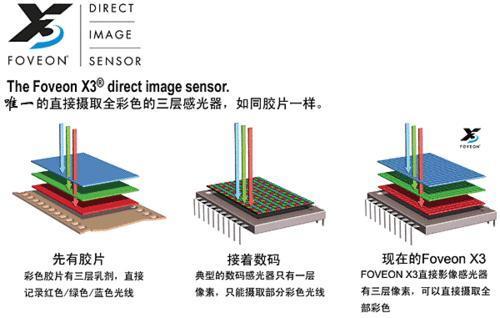

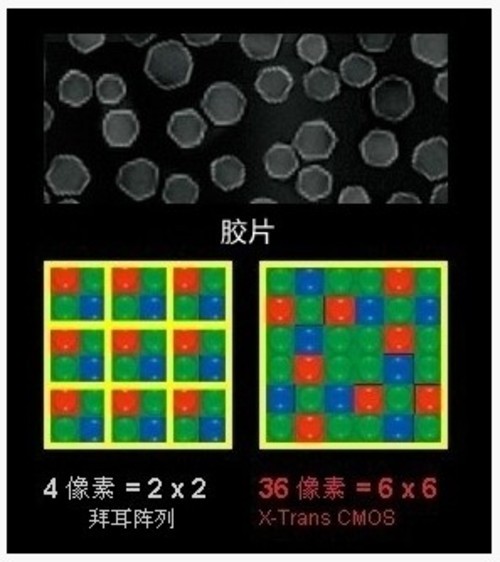

In addition, some camera manufacturers are developing sensors designed to avoid this kind of moiré, such as the Sigma X3 and Fuji X-Trans sensors. I mentioned them in another article, "Watching Sony's New RGBW Sensor's 'W'", but did not describe them in detail at the time, so I will introduce them here.

Sigma's X3 sensor has a completely different architecture from a traditional Bayer-array sensor. A traditional Bayer sensor uses a single layer of RGBG color filters. Sigma's X3 resembles the three-layer design of film: three layers of pixels (B, G, and R) are stacked on the sensor like the three emulsion layers of film, except that film has no such thing as a pixel. Each pixel location of the X3 sensor senses all three RGB colors, so the full color data is captured at the same location, and there is no need for the "color guessing" (demosaicing) that a Bayer array uses to fill in missing colors from nearby pixels. Because the color-guessing step is skipped entirely, there is essentially no color interference between neighboring X3 pixels, and color accuracy benefits. However, stacking three layers attenuates light, which is a problem especially for the red layer at the bottom. Judging from straight-out-of-camera colors, though, the problem does not seem serious, and many people post-process after shooting anyway. How you adjust the colors depends on your own preferences.
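To see why "color guessing" can go wrong on fine detail, here is a minimal sketch of the simplest kind of demosaicing, which averages neighboring green pixels. It assumes the common R-G / G-B tile ordering and a tiny made-up scene. Real cameras use far more sophisticated algorithms, and `bayer_channel` and `estimate_green` are hypothetical names.

```python
# One 2 x 2 Bayer tile, assumed ordering: R G on the first row, G B on the second.
BAYER_TILE = (("R", "G"), ("G", "B"))

def bayer_channel(row, col):
    """Color that the pixel at (row, col) records under the assumed tile."""
    return BAYER_TILE[row % 2][col % 2]

def estimate_green(raw, row, col):
    """Guess green at a red or blue site by averaging its four green neighbors."""
    if bayer_channel(row, col) == "G":
        return raw[row][col]
    neighbors = [(row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1)]
    values = [raw[r][c] for r, c in neighbors
              if 0 <= r < len(raw) and 0 <= c < len(raw[0])]
    return sum(values) / len(values)

# A gray scene with one-pixel-wide vertical stripes: bright on even columns.
stripes = [[200 if col % 2 == 0 else 50 for col in range(6)] for _ in range(6)]

# Pixel (2, 2) is a red site on a bright stripe, so its true green value is 200.
guessed_green = estimate_green(stripes, 2, 2)
print(bayer_channel(2, 2), guessed_green)
```

In this example the red value is measured as 200 but green is guessed as 125, so a gray stripe picks up a false color cast. A stacked sensor that measures all three colors at each location has no such guess to make.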

The other is the Fuji X-Trans sensor, which is a modification of the traditional Bayer-array design. A traditional Bayer sensor repeats a 2 × 2 RGBG tile, while the X-Trans sensor repeats a 6 × 6 tile. The X-Trans filter layout was inspired by the random arrangement of silver halide grains in film, and the color filter array was modified to imitate that disorder. It is not truly disordered, since a regular pattern is still visible, but a 6 × 6 tile repeats far less often than a Bayer tile, so this layout can greatly reduce moiré. The X-Trans sensor also has more green filters. The official explanation is that the human eye is most sensitive to green, so adding more green makes colors look more realistic (that is, more pleasing and closer to what the eye sees).
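The difference between the two tiles can be counted directly. The sketch below uses a 6 × 6 layout as it is commonly published for X-Trans. Treat the exact arrangement as an assumption, since the starting offset differs between descriptions. `channel_counts` is a hypothetical helper name.

```python
from collections import Counter

# Bayer tile (assumed R-G / G-B ordering) and a commonly published X-Trans tile.
BAYER_TILE = ["RG",
              "GB"]
XTRANS_TILE = ["GBGGRG",
               "RGRBGB",
               "GBGGRG",
               "GRGGBG",
               "BGBRGR",
               "GRGGBG"]

def channel_counts(tile):
    """Count how many filters of each color one repeating tile contains."""
    return Counter("".join(tile))

for name, tile in (("Bayer", BAYER_TILE), ("X-Trans", XTRANS_TILE)):
    counts = channel_counts(tile)
    total = sum(counts.values())
    green_share = counts["G"] / total
    print(f"{name}: repeats every {len(tile)} pixels, green share {green_share:.1%}")

# In this layout every row and every column contains all three colors.
columns = ["".join(row[i] for row in XTRANS_TILE) for i in range(6)]
all_colors_everywhere = all(set(line) == {"R", "G", "B"}
                            for line in XTRANS_TILE + columns)
print(all_colors_everywhere)
```

With this layout, 20 of the 36 filters are green, compared with 2 of 4 in the Bayer tile, and the pattern repeats every 6 pixels instead of every 2.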

Both sensors described above dispense with the low-pass filter, which makes images sharper, and their new designs can also reduce or even eliminate moiré.

Take the Canon 70D as an example. Even with a low-pass filter, noticeable moiré appears when photographing a screen, because the backlit RGB pixels of the screen are highly uniform and repetitive. There is a fix: defocus slightly, so that focus is not exactly on the screen's pixels. This reduces moiré at the cost of some sharpness, but the result still looks better than a picture covered in moiré. The technique is not limited to cameras. It also works on mobile phones, especially those that support manual focus.

Besides this workaround, you can also reduce moiré by changing the shooting angle or tilting the camera. You can also change the camera position: try several spots, watch how the moiré changes, and shoot from a spot where the moiré is faint and the composition is still acceptable.
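Why tilting helps can be sketched with two idealized line gratings, one standing in for the screen's pixel rows and one for the sensor's. The beat frequency is the length of the difference between the two frequency vectors. The frequencies below are made-up values, `moire_frequency` is a hypothetical name, and only the fundamental frequency of each grating is considered.

```python
import math

def moire_frequency(freq_a, freq_b, angle_deg):
    """Beat frequency of two line gratings whose lines differ in angle by angle_deg."""
    angle = math.radians(angle_deg)
    return math.sqrt(freq_a ** 2 + freq_b ** 2
                     - 2 * freq_a * freq_b * math.cos(angle))

# Assumed values: sensor grid at 100 lines/mm, projected screen grid at 95 lines/mm.
for tilt in (0, 2, 5, 10, 20):
    beat = moire_frequency(100.0, 95.0, tilt)
    print(f"tilt {tilt:2d} deg -> {beat:5.1f} fringes/mm, fringe spacing {1 / beat:.3f} mm")
```

In this model the fringes are broadest when the two grids are aligned, and a tilt of a few degrees makes them much finer and therefore less noticeable. Changing the shooting distance changes the projected screen frequency in the same formula, which is why moving the camera also changes the pattern.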

Finally, if money is no object, you can simply consider a newer camera, or a full-frame camera without a low-pass filter, to reduce moiré (laughs).
