Autopilot is no guarantee of peace of mind, and people are still an integral part of it.

Typically, the talk around the ergonomics lab at Penn State University is about lecture reminders and videos of wall-climbing robots. But after the first fatal crash involving Tesla's Autopilot driver-assistance system in the spring of this year, critical comments like the following took over the conversation:

Research and development: One failing of designers is declaring that a product meets its requirements before the product is finished.

Postdoctoral fellow: Such failures are inevitable until the technology is perfected. Technology pioneers such as Tesla Motors always attract extra attention.

Graduate student: If the driver had been paying attention, a trailer would not have been mistaken for a traffic sign.

These discussions went on for many days, and each side's view seemed to have its merits. The author therefore set out to understand why this experiment in human-computer interaction, the largest of its kind, failed in this unfortunate accident.

According to the accident record, a Model S driven by a man from Ohio collided with a trailer on Highway 27A in Florida. The car failed to distinguish the white-painted truck from the brightly lit sky, so the emergency braking system did not engage. That is the technical cause of the accident. But for car safety experts and for Tesla, the more important cause is that the driver failed to anticipate the collision and apply the brakes.

Now that autonomous cars are on the road, let us re-examine some misconceptions about smart machines and robots. Many people picture KITT, the artificially intelligent car and sidekick in "Knight Rider." Although it is only fiction, it has shaped people's expectations, and even widely used machines such as the Roomba cleaning robot and the automatic dishwasher have reinforced this view.

Autopilot is not as smart as KITT, and its operating manual clearly states that a person must be one of its components (even the smartest machines require us to at least read the manual). Tesla Motors has publicly stated that the Autopilot software is still a beta version that has yet to be perfected, and it warns drivers to stay alert and to keep their hands on the steering wheel.

But the problem is that Autopilot's technical development and its marketing are out of step. When Tesla Motors announced the software update in October 2015, its blog post, titled "Your Autopilot Has Arrived," gave the impression that a robot chauffeur could do the driving and pick up passengers. In the company's Chinese promotional copy, the feature was translated directly as "driverless." As a result, one driver claimed that he had been misled and that this led to an accident.

Early Autopilot users also contributed to the misuse of this technology. On YouTube, videos of people driving with their hands off the wheel are common, and some even show people playing checkers or building with blocks while driving. In one professional review video watched by hundreds of thousands of people, the creator added only a brief reminder: "Disclaimer: ... The actions in this video were intentional. Safety is the primary consideration. Keep your eyes on the road and don't do anything stupid." In other words, "Don't do what we just did."

The missing concept is that shared control is the basis of autonomous driving. This symbiotic relationship is familiar from fighter pilot training. Since President Carter's time, professionals have used a general term, fly-by-wire, to describe any computer-aided flight control. Like Autopilot, a fly-by-wire system is only an assistive technology that strengthens, rather than replaces, the pilot's control of the aircraft. Before taking command of a cockpit, pilots learn through years of training exactly how the computer collects and processes information. They also learn that even with computer assistance they must stay vigilant and be ready to take over flight control, instead of letting the computer handle everything.

Because ordinary drivers cannot receive in-depth training the way pilots do, car manufacturers must find effective alternatives. For example, when driver-assistance software is installed, first-time users need more than instructions on how to use it. They should instead get a short training course that helps them understand how Autopilot works, which situations it is and is not designed to handle, and why the driver needs to be ready to intervene.

Mica Endsley, a former chief scientist of the US Air Force, said that the problem with automation technology such as Autopilot is that when errors occur, the operator is generally outside the control loop, which delays detection and correction of the problem.

In addition to driver training, autonomous driving software should be designed to reinforce what that training teaches. Software engineers and automotive engineers need to study users' behavior and cognitive patterns to learn how their designs can communicate better with users. Academics and automakers have noticed this new field and have begun more and more research on human-machine interaction. For example, interaction design experts at Stanford University are investigating how to make the assessments and predictions generated by an autonomous vehicle's cameras, radars, and sensors more transparent to users. The experts said that car manufacturers should use voice prompts ("obstacle ahead, braking"), physical cues (such as changing the tilt of the steering wheel when the driver needs to take over control), and other measures to alert the driver to changes in road conditions (such as a truck blocking the road) and to prevent accidents caused by driver inattention.

In current product designs, these alerts still need to be strengthened. For example, the Model S uses only a change in tone and a color change on the Autopilot control panel to remind the driver to take over control. Cadillac's Super Cruise system and Volvo's Pilot Assist system alert the driver through a gentle vibration of the seat or steering wheel. Automakers need to be more assertive in assisting drivers, for example with the multi-sensory approach suggested by the Stanford University experts, which combines steering wheel vibration, voice prompts, and flashing lights.

In this era of instant messaging, connected maps, and mobile transactions, no one wants to lag behind in adopting new technologies. But when using autonomous vehicles, drivers still need to stay alert and focused. This new technology requires both trained humans and intelligent alert systems.

The fatal Autopilot accident in May may be just a worst-case scenario, but it highlights how important it is to get right the way people and machines share control of a car.

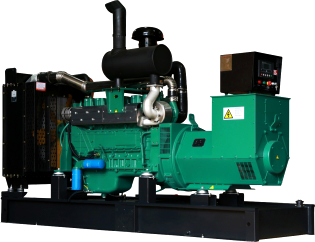
